How to Build a Troubleshooting Flow for Faulty Diagnostic Steps

To build a fault-tolerant troubleshooting flow, map every diagnostic step and its failure modes, then design modular checks that isolate faults and guide rapid, evidence-based recovery. Define clear assumptions, preconditions, and decision gates with guardrails, thresholds, and timeouts. Add explicit checks for false positives and false negatives, and track sensitivity and specificity. Use iterative testing, documentation, and traceability to refine the process, measure performance, and drive continuous improvement. The sections below walk through each of these elements in turn.

Designing a Fault-Tolerant Diagnostic Flow

Designing a fault-tolerant diagnostic flow starts with mapping the entire diagnostic process and identifying where failures could occur. For each step, define its failure modes, the criteria for selecting it, and a graceful fallback path so the flow stays useful under uncertainty. Ground the design in fault tolerance principles: redundancy where a step is critical, independent checks, and clear escalation when results diverge. Improve diagnostic accuracy by tying each test to a measurable outcome and using tiered validation to reduce false positives and false negatives. Document each step with its rationale, expected data, and decision thresholds so you can audit performance and retrace errors. Build modular components that isolate faults, so a failing component can be swapped out without cascading effects. Implement automatic cross-checks, timeouts, and sanity checks to detect anomalous readings early. When evidence is ambiguous, prefer the conservative path: constrain risk, but leave the final decision with the user so the system stays trustworthy under varying conditions. Finally, pilot the flow against realistic scenarios, collect evidence, and iterate to improve fault tolerance and diagnostic accuracy.
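As a rough illustration of modular steps with independent cross-checks, here is a minimal Python sketch. The names `Step`, `run_step`, `run_flow`, and the outcome labels are hypothetical, chosen only for this example; it assumes each check is a zero-argument callable that returns `True` for a healthy reading.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    """One diagnostic step (hypothetical structure for illustration)."""
    name: str
    primary: Callable[[], bool]
    # Optional independent cross-check of the same condition.
    secondary: Optional[Callable[[], bool]] = None


def run_step(step: Step) -> str:
    """Return 'pass', 'fail', 'escalate', or 'error' for a single step."""
    try:
        first = step.primary()
    except Exception:
        # The step itself is faulty; report it instead of crashing the flow.
        return "error"
    if step.secondary is None:
        return "pass" if first else "fail"
    try:
        second = step.secondary()
    except Exception:
        # The cross-check could not run, so the result is unconfirmed.
        return "escalate"
    if first != second:
        # Independent checks diverge: escalate rather than guess.
        return "escalate"
    return "pass" if first else "fail"


def run_flow(steps: List[Step]) -> Dict[str, str]:
    """Run every step in isolation so one failure cannot cascade."""
    return {step.name: run_step(step) for step in steps}
```

Because each step is evaluated on its own, a broken check shows up as `"error"` for that step only, and the remaining steps still produce usable results.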

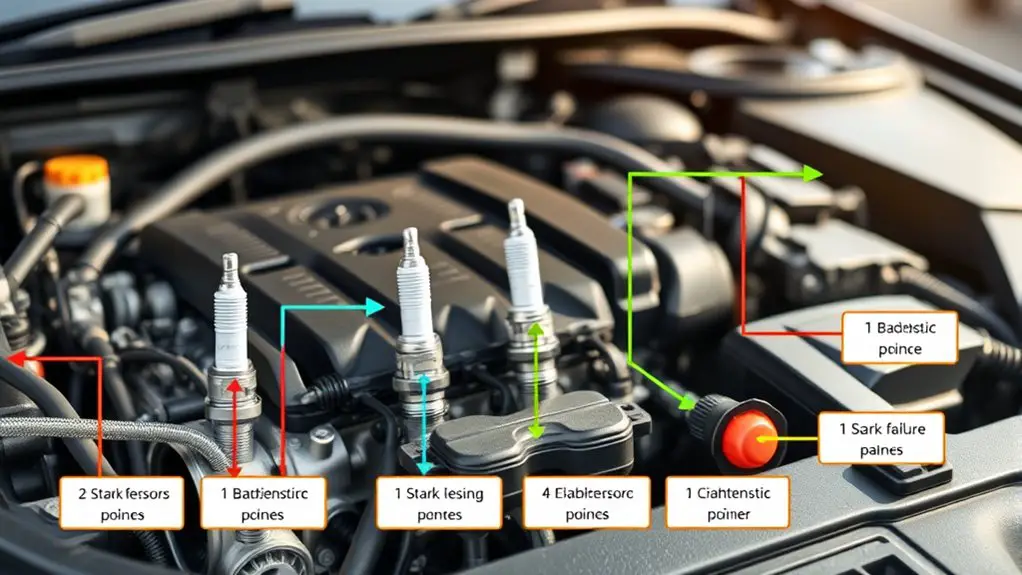

Mapping Decision Points and Guardrails

Mapping decision points and guardrails means pinpointing where choices must be made and where safeguards should intervene. Map the flow by isolating the critical junctures where a decision tree routes outcomes and where error handling must trigger corrective action. Focus on traceable, testable transitions: input signals, validation checks, and recovery paths. Each decision node should balance autonomy and oversight, letting you proceed when the data is clear and pausing when uncertainty rises. Guardrails must define thresholds, timeouts, and escalation criteria so faults are contained without stifling progress. Document expected states, possible deviations, and the evidence supporting each pathway; include verifiable checksums or logs to justify redirects. Apply iterative review cycles: simulate faults, measure decision latency, and adjust guardrails to reduce misrouting. Keep the map readable and the decisions reproducible so teams can audit them later. This approach pairs freedom with accountability and sustains a robust diagnostic flow through disciplined decision trees and resilient error handling.
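A decision node with guardrails can be sketched as a small routing function. This is an illustrative sketch only: the `Guardrail` fields, the idea of a single numeric confidence score, and the three route labels are assumptions made for the example, not something the flow prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrail:
    """Thresholds for one decision node (hypothetical field names)."""
    proceed_at: float      # confidence at or above which the flow continues
    escalate_below: float  # confidence below which a human must review
    timeout_s: float       # maximum time the node may spend deciding


def route(confidence: float, elapsed_s: float, guardrail: Guardrail) -> str:
    """Return 'proceed', 'pause', or 'escalate' for a decision node."""
    if elapsed_s > guardrail.timeout_s:
        # A stalled decision is treated as a fault in its own right.
        return "escalate"
    if confidence >= guardrail.proceed_at:
        return "proceed"
    if confidence < guardrail.escalate_below:
        return "escalate"
    # In between: hold and gather more evidence before routing.
    return "pause"
```

Keeping the thresholds in a separate, immutable object makes them easy to log next to each decision and to adjust after a review cycle without touching the routing logic.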

Defining Clear Assumptions and Preconditions

Clear assumptions and preconditions anchor reliable diagnostics; when you state them upfront, you reduce ambiguity and streamline decision points. You’ll want to define what must be true before testing begins, and specify what you will not assume. This creates a stable baseline for evaluation and prevents drift as you gather evidence. Focus on assumption clarity: articulate the minimal conditions that must hold for each diagnostic step to be valid. Pair this with precondition specifics, detailing required data, environment, and tools. Document the rationale briefly so others understand why these conditions matter. Keep language precise and checkable: use observable criteria, not opinions. As you progress, revisit assumptions only when evidence contradicts them, then adjust deliberately. This disciplined upfront work preserves a sense of freedom by removing guesswork and enabling you to pursue informative paths confidently. The outcome is a repeatable, auditable diagnostic flow grounded in clear assumptions.
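Preconditions become checkable when each one is written as a named predicate over the recorded context. The sketch below assumes a plain dictionary as the context; the keys `supply_v` and `ambient_c` and the 10 to 35 degree range are invented for illustration.

```python
from typing import Callable, Dict, List

Precondition = Callable[[dict], bool]

# Hypothetical preconditions for one diagnostic step.
PRECONDITIONS: Dict[str, Precondition] = {
    "supply voltage recorded": lambda ctx: "supply_v" in ctx,
    "ambient temperature within 10-35 C": lambda ctx: (
        ctx.get("ambient_c") is not None and 10 <= ctx["ambient_c"] <= 35
    ),
}


def unmet_preconditions(
    context: dict, preconditions: Dict[str, Precondition]
) -> List[str]:
    """Return the names of preconditions that do not hold for this context."""
    return [name for name, holds in preconditions.items() if not holds(context)]


if __name__ == "__main__":
    missing = unmet_preconditions({"supply_v": 12.1}, PRECONDITIONS)
    if missing:
        print("Do not start the step; unmet preconditions:", missing)
```

Returning the names of the unmet conditions, rather than a single boolean, keeps the record observable and auditable.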

Building Checks to Catch False Positives and Negatives

To guard diagnostics against misreads, implement checks that detect false positives and false negatives early in the flow. These checks should be explicit, testable criteria tied to the assumptions you stated upfront. Design criteria that map directly to known signals, thresholds, and expected behaviors, then validate each with independent data or replicas. False positive checks separate noise from signal by requiring agreement across alternative indicators and a time-delayed confirmation. False negative checks accept a negative result only after control runs show that the measurement is stable and unaffected by conditions that should not change it. Document acceptance criteria, failure modes, and recovery steps so you can audit decisions later. Build guardrails that force retesting under borderline conditions, and require cross-checks from at least two independent methods before advancing. Track sensitivity, specificity, and false discovery rate, and use those metrics to refine thresholds iteratively. This disciplined approach preserves autonomy while reducing misreads and unintended biases in your diagnostic flow.
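The three metrics named above follow directly from confusion-matrix counts: sensitivity is TP / (TP + FN), specificity is TN / (TN + FP), and false discovery rate is FP / (FP + TP). A minimal sketch, with the function name `diagnostic_metrics` chosen for this example:

```python
from typing import Dict, Optional


def _ratio(numerator: int, denominator: int) -> Optional[float]:
    """Return numerator / denominator, or None when the ratio is undefined."""
    return numerator / denominator if denominator else None


def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, Optional[float]]:
    """Compute metrics from counts of true/false positives and negatives."""
    return {
        # Share of real faults that the check caught.
        "sensitivity": _ratio(tp, tp + fn),
        # Share of healthy cases that the check correctly cleared.
        "specificity": _ratio(tn, tn + fp),
        # Share of raised alarms that were wrong.
        "false_discovery_rate": _ratio(fp, fp + tp),
    }
```

For example, 90 true positives, 10 false negatives, 80 true negatives, and 20 false positives give a sensitivity of 0.9, a specificity of 0.8, and a false discovery rate of 20/110, roughly 0.18.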

Implementing Iterative Testing and Validation

You implement iterative test cycles to rapidly surface inconsistencies and confirm fixes across multiple passes. Each validation milestone should be clearly defined, measurable, and linked to specific failure modes so you can judge progress objectively. You’ll adjust tests based on evidence from prior cycles, documenting learnings to tighten the next round.

Iterative Test Cycles

Iterative test cycles involve repeatedly running targeted checks to refine fault hypotheses and validate fixes. You approach each cycle with clear objectives, measurable criteria, and documented observations. Start by formulating a concise hypothesis, then execute focused tests that challenge or support it. After each test, capture results, assess deviations, and adjust your hypothesis or implementation accordingly. Emphasize rapid feedback loops: small, reversible steps that reveal cause-and-effect relationships without overcommitting time or resources. Maintain traceability between tests and outcomes so you can reproduce or backtrack if needed. Prioritize data over assumptions, and document decisions with objective evidence. This disciplined cadence builds confidence, accelerates learning, and preserves autonomy by showing exactly what changed, why, and what happened next.

Validation Milestones

Validation milestones anchor iterative testing and validation, ensuring progress is measurable and traceable. You’ll define short, objective validation criteria for each phase, then execute cycles that reveal gaps without overreacting to anomalies. Treat each milestone checkpoint as a concrete decision point: pass, revise, or revert. Document results succinctly, linking outcomes to the criteria established upfront. Maintain a transparent audit trail so teammates can reproduce conclusions and verify improvements. Favor small, incremental tests over large, speculative runs to minimize risk and ambiguity. When a milestone is met, advance with a documented confidence assessment and a clear next target. If criteria aren’t met, adjust assumptions and reset the test design promptly. This disciplined cadence sustains freedom through measurable progress and accountable validation.
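The pass, revise, or revert decision can be made mechanical once the criteria are explicit. The sketch below rests on one assumption that the text does not state: a criterion that passed at an earlier milestone and fails now is treated as a regression and triggers a revert, while any other failure triggers a revision. The function name and arguments are hypothetical.

```python
from typing import Dict, Set


def milestone_decision(results: Dict[str, bool], previously_passing: Set[str]) -> str:
    """Decide 'pass', 'revise', or 'revert' at a milestone checkpoint.

    results maps each criterion name to whether it was met this cycle.
    previously_passing holds criteria that were met at the last milestone.
    """
    failed = {name for name, met in results.items() if not met}
    if not failed:
        return "pass"
    if failed & previously_passing:
        # Assumed rule: something that used to work is now broken.
        return "revert"
    return "revise"
```

Logging the `results` dictionary alongside the returned decision gives teammates what they need to reproduce the conclusion.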

Documenting the Flow and Maintaining Traceability

Documenting the flow and maintaining traceability are essential for diagnosing faulty outcomes: you should map each step of the diagnostic process, capture decisions and their rationale, and attach evidence to the corresponding actions. You’ll establish clear links between hypotheses, tests, results, and conclusions, enabling rapid review and accountability. This discipline supports freedom through transparency and repeatability, not rigidity.

- Create a concise flow map that labels each diagnostic action, its purpose, and expected outcomes.

- Attach decisions to supporting evidence, noting alternative paths considered and why they were set aside.

- Maintain document control and version history, so changes, authors, and timestamps are visible.

- Preserve an auditable trail from initial symptom to final disposition, ensuring future investigators can rebuild the reasoning.

This approach reduces ambiguity, accelerates fault isolation, and sustains trust in the process.
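One lightweight way to keep such a trail is a list of immutable entries, each pointing at the entry that led to it. This is a sketch under assumptions: the `TraceEntry` fields, the `kind` labels, and the `trace_back` helper are illustrative names, and a real system would persist entries rather than hold them in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class TraceEntry:
    """One auditable record in the diagnostic trail (hypothetical schema)."""
    entry_id: str
    kind: str       # e.g. "symptom", "hypothesis", "test", "result", "disposition"
    summary: str
    evidence: str   # pointer to a log, snapshot, or measurement
    author: str
    parent_id: Optional[str] = None
    recorded_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def trace_back(entries: List[TraceEntry], final_id: str) -> List[TraceEntry]:
    """Rebuild the chain from the first entry to the one with final_id."""
    by_id = {entry.entry_id: entry for entry in entries}
    chain: List[TraceEntry] = []
    seen = set()
    current: Optional[str] = final_id
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle detected at entry {current!r}")
        seen.add(current)
        entry = by_id[current]  # raises KeyError if the trail is broken
        chain.append(entry)
        current = entry.parent_id
    return list(reversed(chain))
```

Calling `trace_back` with the identifier of the final disposition returns the entries in order from the initial symptom, and a missing link surfaces as an error instead of a silent gap.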

Measuring Success and Continuous Improvement

Measuring success and continuous improvement begins with defining clear, objective metrics that align with diagnostic goals and organizational standards; by quantifying performance, you can rapidly identify gaps, track progress, and prioritize improvements. You’ll establish performance indicators that reflect diagnostic accuracy, time to resolution, and recurrence rates, ensuring they’re scalable across teams. Use data-driven targets, documented baselines, and regular reviews to maintain accountability without stifling initiative. Implement feedback loops that convert observations into actionable adjustments, enabling you to pivot strategies as needed. Track both process measures (cycle time, step adherence) and outcome measures (correct diagnoses, customer impact) to balance efficiency with quality. Transparently share dashboards and learnings to empower continuous iteration, while preserving autonomy. Ground improvements in repeatable experiments, A/B testing, and post-mortems, so you can prove causal effects of changes. In short, measure, learn, and adapt with disciplined, forward-looking rigor.
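The indicators mentioned above can be computed from closed case records with a few lines of code. In this sketch the `Case` fields and the choice of the median for time to resolution are assumptions made for illustration; your own records and summary statistics may differ.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class Case:
    """A closed troubleshooting case (hypothetical record layout)."""
    correct_diagnosis: bool
    hours_to_resolution: float
    recurred: bool


def summarize(cases: List[Case]) -> Dict[str, float]:
    """Return accuracy, median time to resolution, and recurrence rate."""
    if not cases:
        raise ValueError("at least one case is required")
    total = len(cases)
    return {
        "diagnostic_accuracy": sum(c.correct_diagnosis for c in cases) / total,
        "median_hours_to_resolution": median(c.hours_to_resolution for c in cases),
        "recurrence_rate": sum(c.recurred for c in cases) / total,
    }
```

Running the same function over the baseline period and over the period after a change gives a like-for-like comparison for regular reviews.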

Frequently Asked Questions

How Can Biases Affect Diagnostic Decisions and How to Mitigate Them?

Ironically, you may believe biases don’t touch your choices, yet cognitive biases quietly steer your decisions. They distort evidence, anchor judgments, and let focus drift during diagnostic steps. You can counter them with deliberate decision-making strategies: preregister hypotheses, seek disconfirming data, use checklists, and compare alternatives. By documenting your reasoning, you improve reliability. To keep your freedom to explore without being misled, practice structured reflection, seek peer review, and continually test assumptions against outcomes.

What Tools Best Visualize Complex Decision Pathways for Teams?

You’ll want visualization techniques and decision trees to map complex decision pathways for teams. Start by outlining outcomes, then layer steps, checks, and dependencies so everyone sees cause and effect clearly. Use decision trees to reveal branches, probabilities, and risks, and pair them with visual dashboards for real-time updates. Keep it simple, actionable, and collaborative—empower your colleagues to question assumptions, test scenarios, and align on next steps with evidence-based clarity.

How to Handle Non-Reproducible Failures in Troubleshooting Flows?

In short, non-reproducible failures demand disciplined tracing and flexible, repeatable steps. Imagine a ship at dusk: you chase the faint currents, document every sensor reading, and standardize inquiry paths so wind shifts don’t derail you. Your troubleshooting strategies rely on reproducible tests, logs, time-stamped snapshots, and cross-team reviews. Prioritize narrowly bounded hypotheses, controlled experimentation, and post-mortems to convert elusive bugs into actionable insights, while preserving your freedom to adapt.

When to Retire or Overhaul an Entire Diagnostic Model?

Retire or overhaul a diagnostic model when it consistently fails its acceptance criteria and the evidence shows persistent gaps that cannot be fixed. If performance dips below threshold across multiple metrics, or you can’t justify fixes with data, initiate a diagnostic overhaul. Document failure modes, cost-benefit, and risk. Plan a phased retirement to preserve continuity. You’ll gain clarity, control, and freedom by replacing brittle steps with validated, transparent logic, then revalidating against real-world scenarios. Maintain rigorous review throughout.

How to Balance Speed and Accuracy in Iterative Tests?

Balancing speed and accuracy comes from structured iterative testing: start with a quick, low-cost check, then narrow your focus as signals clarify. You’ll maximize diagnostic efficiency by measuring results, documenting each step, and cutting tests that don’t change the outcome. Use early stopping when confidence thresholds are met, and reserve deeper tests for uncertain cases. This disciplined approach supports freedom in exploration while delivering reliable decisions under uncertainty.
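Early stopping can be sketched as a sequential update that runs tests cheapest-first and halts once the probability of the fault crosses a confidence threshold. This example swaps in a simple Bayesian odds update (posterior odds = prior odds × likelihood ratio) as one possible way to quantify confidence; it assumes the tests are conditionally independent, and the function name, thresholds, and likelihood ratios are illustrative.

```python
from typing import Iterable, List, Tuple


def sequential_diagnosis(
    prior: float,
    tests: Iterable[Tuple[str, float]],
    upper: float = 0.95,
    lower: float = 0.05,
) -> Tuple[float, List[str]]:
    """Apply tests in order and stop once the fault probability is decisive.

    tests yields (name, likelihood_ratio) pairs, ordered cheapest first.
    Returns the final probability and the names of the tests actually used.
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    odds = prior / (1.0 - prior)
    probability = prior
    used: List[str] = []
    for name, likelihood_ratio in tests:
        if probability >= upper or probability <= lower:
            break  # confident enough; skip the remaining, costlier tests
        odds *= likelihood_ratio
        probability = odds / (1.0 + odds)
        used.append(name)
    return probability, used
```

If `tests` is a generator that performs each test only when the loop asks for the next item, the deeper tests are never executed once the threshold is met.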