How to Build a Troubleshooting Flow for Misleading Sensor Readings

To build a troubleshooting flow for misleading sensor readings, start by defining the scope, objectives, and decision gates you’ll use to judge success. Establish data integrity checks, calibration schedules, and validation rules that are versioned and auditable. Detect anomalies with clear thresholds and require corroboration across related channels. Isolate root causes by aligning timestamps, reproducing conditions, and ruling out environmental or wiring issues. Implement corrective actions with accountable owners and preventive controls, then feed what each incident teaches you back into the flow so it improves over time.

Defining the Scope of the Troubleshooting Flow

Defining the scope of the troubleshooting flow establishes what you’ll diagnose, how you’ll measure success, and which components are in or out of scope. You’ll set boundaries that prevent drift and keep improvements focused on meaningful outcomes. Start with a clear scope definition: identify which sensors, channels, and time windows are actionable, and specify excluded elements to avoid scope creep. Next, articulate troubleshooting objectives that align with user needs and system reliability goals. Your objectives should be measurable, observable, and time-boxed, such as reducing false readings by a specified percentage or increasing downtime detection accuracy. Document success criteria, data sources, and the decision gates that determine progression or termination. Maintain traceability by linking each objective to concrete signals, thresholds, and validation checks. This disciplined framing enables rapid iteration while preserving the freedom to adapt methods. By defining scope and objectives upfront, you create a concise, data-driven foundation for the flow.

Establishing Data Integrity Checks and Validation Rules

To guarantee trust in sensor readings, you’ll establish data integrity checks and validation rules that run continuously and are transparently documented. You’ll implement a disciplined framework that explains what is checked, how it’s checked, and why it matters, so you can act with confidence. Use measurable criteria and repeatable processes to minimize ambiguity.

- Data validation criteria are specified, versioned, and independently tested before deployment.

- Sensor calibration schedules are defined, logged, and periodically verified against reference standards.

- Boundary conditions and tolerance bands are codified, with automatic alerts when limits are breached.

- Audit trails capture every change, drift, and adjustment, ensuring traceability and accountability.
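
As a minimal sketch of the first and third points, a tolerance band can be stored as a small versioned rule object so that every rejection names the rule version that produced it. The `RangeRule` class, the `temp_c` channel, the limits, and the version string are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RangeRule:
    """A versioned tolerance band for one channel (all fields are illustrative)."""
    channel: str
    low: float
    high: float
    version: str


def validate(reading: dict, rule: RangeRule) -> Optional[str]:
    """Return None if the reading passes, else a message naming the rule version."""
    value = reading.get(rule.channel)
    if value is None:
        return f"{rule.channel}: missing value (rule {rule.version})"
    if not rule.low <= value <= rule.high:
        return f"{rule.channel}: {value} outside [{rule.low}, {rule.high}] (rule {rule.version})"
    return None


# Hypothetical rule and readings, for illustration only.
temp_rule = RangeRule(channel="temp_c", low=-40.0, high=85.0, version="1.2.0")
print(validate({"temp_c": 21.5}, temp_rule))   # within the band
print(validate({"temp_c": 130.0}, temp_rule))  # breaches the band
```

Carrying the version in the message is one way to keep the audit trail tied to the exact rule that was in force when a reading was rejected.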

Detecting Anomalies and Flagging Suspect Readings

You’ll establish clear anomaly detection triggers and set objective thresholds to distinguish genuine shifts from noise. When readings exceed or deviate from these baselines, flag them as suspect for further validation and review. This structured approach keeps detection transparent and data-driven, enabling timely investigation of potential sensor issues.

Anomaly Detection Triggers

Anomaly detection triggers are the rules that decide when a reading deviates from expected behavior and should be flagged for review. You implement objective thresholds, trend analysis, and consistency checks to separate noise from meaningful signals. By design, these triggers balance sensitivity with usability, reducing false alerts while catching real issues. Use clear criteria and document reasoning for auditability.

- Define stable baselines

- Track short- and long-term trends

- Confirm with calibration checks and sensor drift tests

- Require corroboration across related channels

When triggers fire, you escalate for review, not immediate rejection. You update models with new data and continuously refine thresholds. This disciplined approach keeps operators in control while maintaining reliability, because every trigger rests on transparent, repeatable methods.
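
One possible way to express such a trigger is sketched below. It scores a reading against a stable baseline and escalates only when a related channel also deviates. The robust z-score (median and MAD) is a substituted technique rather than something the flow prescribes, and the `classify` function, the 3.5 threshold, and the sample values are assumptions.

```python
from statistics import median


def robust_z(value: float, baseline: list) -> float:
    """Deviation of value from the baseline median, scaled by the MAD."""
    center = median(baseline)
    mad = median(abs(x - center) for x in baseline)
    if mad == 0:
        # A perfectly flat baseline: any difference counts as an extreme deviation.
        return 0.0 if value == center else float("inf")
    return 0.6745 * (value - center) / mad


def classify(primary_z: float, related_z: list, threshold: float = 3.5) -> str:
    """Return 'ok', 'suspect' (breach without corroboration) or 'escalate'."""
    if abs(primary_z) < threshold:
        return "ok"
    corroborated = any(abs(z) >= threshold for z in related_z)
    return "escalate" if corroborated else "suspect"


# Hypothetical baseline window from a period of trusted operation.
baseline = [20.1, 20.3, 19.9, 20.0, 20.2, 20.1, 19.8]
z = robust_z(25.0, baseline)
print(classify(z, related_z=[0.4, -1.1]))  # breach seen on one channel only
print(classify(z, related_z=[4.2]))        # breach confirmed by a related channel
```

The median and MAD are used here because a single bad sample in the baseline window shifts them far less than it would shift a mean and standard deviation.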

Flagging Suspicious Readings

Flagging suspicious readings involves applying objective anomaly signals to identify data points that warrant review. You’ll establish thresholds, compute residuals, and compare against baselines derived from trusted data sources. When a value breaches criteria, tag it for inspection rather than auto-acting on it, preserving human judgment. Track suspicious trends over time, not just isolated outliers, to distinguish random noise from systematic drift. Validate signals against multiple data sources, and require corroboration before escalation. Document decisions, including the rationale for flagging and any retests conducted. Maintain a transparent audit trail so teams can reproduce the flow. Balance rigor with flexibility, allowing operators to adjust sensitivity as scenarios change. This approach supports freedom by enabling informed, data-driven choices without surrendering control to opaque automation.
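
The distinction between isolated outliers and systematic drift can be sketched with residuals and run lengths. In this illustrative version, a breach that persists in the same direction for `min_run` consecutive samples is tagged as drift, and shorter breaches are tagged as outliers. The function name, the tolerance, and the run length of 3 are assumptions, not values from the flow.

```python
from itertools import groupby


def tag_residuals(readings, baseline, tolerance, min_run=3):
    """Tag each reading 'ok', 'outlier' (short breach) or 'drift' (sustained breach)."""
    signs = []
    for reading, expected in zip(readings, baseline):
        residual = reading - expected
        if residual > tolerance:
            signs.append(1)
        elif residual < -tolerance:
            signs.append(-1)
        else:
            signs.append(0)

    tags = []
    # Group consecutive samples that breach in the same direction.
    for sign, group in groupby(signs):
        length = len(list(group))
        if sign == 0:
            tags.extend(["ok"] * length)
        elif length >= min_run:
            tags.extend(["drift"] * length)
        else:
            tags.extend(["outlier"] * length)
    return tags


# Hypothetical readings against a constant expected value of 10.0.
readings = [10.0, 10.1, 12.5, 10.0, 10.9, 11.0, 11.2, 11.3]
tags = tag_residuals(readings, [10.0] * len(readings), tolerance=0.5)
print(tags)
```

With these sample values the single spike at 12.5 is tagged as an outlier, while the last four readings, which all sit above the tolerance band, are tagged as drift. The tags only mark readings for inspection; nothing is discarded automatically.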

Root Cause Isolation: Techniques and Decision Points

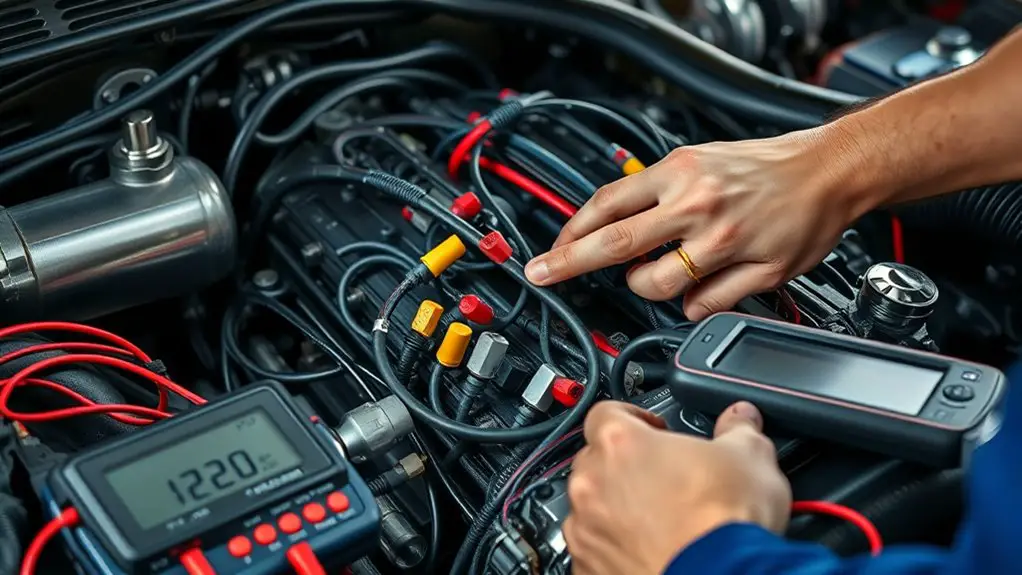

Root cause isolation hinges on a disciplined, data-driven approach that quickly distinguishes true faults from transient noise and sensor artifacts. You’ll apply structured reasoning to separate symptom from cause, using repeatable checks and quantified evidence. This is root cause analysis in practice, not guesswork, and it supports lean, effective troubleshooting techniques.

- Align measurements: synchronize timestamps, verify units, and confirm calibration status to guarantee data integrity.

- Reproduce under controlled conditions: replicate readings, isolate variables, and document deviations with precise thresholds.

- Compare with baselines: benchmark against historical trends, sensor health indicators, and known failure modes to spot anomalies.

- Apply elimination reasoning: rule out environmental, wiring, and software factors before accepting a fault hypothesis.

Decision points center on data sufficiency, testability, and risk of false positives. You’ll decide when to escalate, patch, or rerun with refined instrumentation, always prioritizing clarity and verifiable evidence.
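
The timestamp alignment step can be sketched as a nearest-sample match with a maximum allowed skew. The sketch assumes both channels report time in seconds and that the second channel is already sorted by time. The `align_nearest` name and the 0.25-second skew are hypothetical.

```python
from bisect import bisect_left


def align_nearest(ref_times, other, max_skew):
    """Pair each reference time with the nearest (time, value) sample in other.

    other must be sorted by time. Returns (ref_time, value) pairs, with None
    as the value when no sample lies within max_skew.
    """
    other_times = [t for t, _ in other]
    aligned = []
    for t in ref_times:
        i = bisect_left(other_times, t)
        # Only the neighbours just before and just after t can be nearest.
        candidates = other[max(i - 1, 0): i + 1]
        best = min(candidates, key=lambda s: abs(s[0] - t), default=None)
        if best is not None and abs(best[0] - t) <= max_skew:
            aligned.append((t, best[1]))
        else:
            aligned.append((t, None))
    return aligned


# Hypothetical samples: reference clock ticks and a second channel with jitter.
ref_times = [0.0, 1.0, 2.0, 3.0]
other = [(0.05, 5.0), (1.4, 5.2), (2.1, 5.1)]
pairs = align_nearest(ref_times, other, max_skew=0.25)
print(pairs)
```

Leaving unmatched reference times as `None` rather than interpolating keeps gaps visible, which matters when the question is whether the data is sufficient to support a fault hypothesis.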

Corrective Actions, Documentation, and Preventive Measures

You’ll outline corrective actions with clear criteria, responsibilities, and success metrics to guarantee prompt, verifiable recovery. You’ll document the incident timeline, actions taken, and outcomes using standardized formats to enable traceability and auditability. You’ll define preventive measures as data-driven controls and updates to procedures to reduce recurrence, then monitor their effectiveness and adjust as needed.

Corrective Actions Overview

Corrective actions provide a clear, actionable path to resolve sensor reading discrepancies, document what happened, and implement safeguards to prevent recurrence. You’ll pursue corrective measures with a disciplined approach, emphasizing traceability, reproducibility, and efficiency. Data-informed decisions guide each step, from root-cause hypotheses to verification outcomes, ensuring integrity of the flow.

- Inspect sensor outputs and compare them with the baseline to identify deviations in a controlled, repeatable manner.

- Calibrate or recalibrate sensors as needed, following a documented calibration method with explicit acceptance criteria.

- Implement containment and rapid correction measures to prevent erroneous readings from propagating downstream.

- Validate results with independent checks, logging all changes and preparing a concise summary for stakeholders.

This mindset supports freedom through clarity, rigor, and responsible risk management.
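
As an illustration of calibrating against documented acceptance criteria, the sketch below fits a two-point linear correction and then checks it at an independent reference point. Two-point linear calibration is only one possible method and assumes the sensor response is approximately linear. The function names, reference values, and `max_error` limit are hypothetical.

```python
def two_point_calibration(raw_low, raw_high, ref_low, ref_high):
    """Return (gain, offset) such that corrected = gain * raw + offset."""
    if raw_high == raw_low:
        raise ValueError("reference points must produce distinct raw readings")
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low
    return gain, offset


def passes_acceptance(gain, offset, check_raw, check_ref, max_error):
    """Verify the correction at a reference point not used for the fit."""
    corrected = gain * check_raw + offset
    return abs(corrected - check_ref) <= max_error


# Hypothetical references: the sensor reads 1.0 at a true 0.0 and 51.0 at a true 100.0.
gain, offset = two_point_calibration(1.0, 51.0, 0.0, 100.0)
print(gain, offset)

# Independent mid-range check: a raw reading of 26.1 should correct to about 50.0.
print(passes_acceptance(gain, offset, check_raw=26.1, check_ref=50.0, max_error=0.5))
```

Checking at a point that was not used for the fit is what makes the validation independent; a correction always reproduces its own two fitting points exactly.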

Documentation Best Practices

Documentation best practices emphasize clear record-keeping, traceable decisions, and verifiable outcomes. You’ll structure findings with objective notes, timestamps, and source references to support reproducibility. Use concise summaries, followed by data-backed evidence, to justify each action. Adopt standardized documentation formats that promote consistency across teams and tools, reducing ambiguity. Maintain version control for all records, enabling you to compare iterations, roll back when necessary, and demonstrate lineage from observation to decision. Include failure modes, assumptions, and KPIs tied to corrective steps. Enforce access controls and maintain audit trails, so teammates can review rationales without ambiguity. Keep templates lean, update them routinely, and verify outcomes through measurable metrics. Freedom here means clarity, discipline, and verifiable progress.

Preventive Measures Strategy

Preventive measures build on documented corrective actions by establishing repeatable processes that reduce recurrence and improve reliability. You’ll implement a disciplined cycle that integrates sensor calibration, maintenance schedules, and validated responses to drift or anomalies.

- Define precise thresholds and trigger points so you act before readings degrade.

- Schedule regular calibration and cross-checks to preserve accuracy across environments.

- Document every intervention, outcome, and remaining risk to tighten feedback loops.

- Review performance data quarterly to adjust thresholds, alerts, and preventive steps.

This approach delivers autonomy with accountability, freeing you to focus on system resilience rather than firefighting. By combining data-driven checks with clear procedures, you create a transparent, trustworthy basis for ongoing sensor health and decision-making.
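
Acting before readings degrade implies some estimate of how fast a sensor is drifting. One simple sketch, assuming the drift is roughly linear over the review window, fits a least-squares line to recent calibration errors and projects when it will reach the trigger point. The `time_to_threshold` name, the daily sampling, and the 0.30 limit are hypothetical.

```python
def time_to_threshold(times, values, threshold):
    """Estimate the time from the last sample until a fitted line reaches threshold.

    Returns None when the trend is flat, is moving away from the threshold,
    or has already crossed it.
    """
    n = len(times)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("timestamps must not all be equal")
    slope = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values)) / sxx
    intercept = mean_v - slope * mean_t
    current = slope * times[-1] + intercept
    if slope == 0:
        return None
    remaining = (threshold - current) / slope
    return remaining if remaining >= 0 else None


# Hypothetical calibration error measured once per day.
days = [0, 1, 2, 3, 4]
errors = [0.10, 0.12, 0.14, 0.16, 0.18]
remaining_days = time_to_threshold(days, errors, threshold=0.30)
print(remaining_days)
```

With these sample values the error grows by 0.02 per day, so the projection is roughly six days until the 0.30 limit. A projection like this is only as good as the linearity assumption, so it is better treated as a scheduling hint than as a guarantee.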

Roles, Responsibilities, and Continuous Improvement

In this phase, roles and responsibilities are defined so that every team member knows what to do and when, and continuous improvement is built into the workflow from day one. You establish a clear ownership map using a responsibility matrix, so accountability is explicit and traceable. You define decision points, escalation paths, and time-bound actions to minimize ambiguity during sensor anomalies. Team collaboration becomes a structured habit: daily stand-ups, data reviews, and post-incident debriefs feed lessons into the process rather than assigning blame to individuals. You document metrics that measure detection speed, confirmation accuracy, and remediation effectiveness, then iterate on the framework based on outcomes. Continuous improvement is anchored in a feedback loop: collect evidence, adjust procedures, and revalidate with stakeholders. This approach preserves autonomy while aligning efforts toward reliable readings, enabling you to move quickly yet deliberately when misreads occur.

Frequently Asked Questions

How Often Should Sensor Calibrations Be Audited?

Calibration frequency depends on your sensors, but a practical baseline is quarterly audits, with annual full calibration. You should schedule audits based on risk, drift history, and manufacturer guidance. Track each sensor’s metadata, establish audit guidelines, and document variance thresholds. You’ll adjust the cadence if drift exceeds limits or after maintenance. Maintain transparency, trust, and data integrity by enforcing strict audit guidelines and revisiting calibration frequency whenever performance shifts.

What Is the Rollback Procedure for Misreadings?

A rollback to the prior known-good state is often the simplest first response to a misreading. Your rollback procedure starts with documenting the exact rollback criteria, then applies a sensor reset and revalidates readings against a baseline. If discrepancies persist, escalate to calibration checks and a review of the logged data. Maintain a clear, auditable trail, verify all affected subsystems, and re-run tests until results align with the verified sensor model.

How Are False Positives Quantified and Tracked?

You quantify false positives by comparing detections against validated ground truth, then computing the false positive rate, precision, and specificity. You establish tracking methods to log each event: timestamp, sensor, threshold, and outcome. You monitor trends with dashboards, flag drift, and audit samples periodically. You keep metrics actionable, set targets, and review all cases to refine models. This keeps the false positive metrics transparent and reproducible, and leaves you free to improve thresholds as evidence accumulates.
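
A minimal sketch of that computation follows, assuming each logged event has been reduced to a pair of booleans: whether the flow flagged it and whether ground truth confirmed a fault. The `alert_metrics` name and the sample counts are hypothetical.

```python
def alert_metrics(events):
    """Compute false positive rate, precision and specificity.

    events is an iterable of (flagged, truly_faulty) boolean pairs.
    A metric is None when its denominator is zero.
    """
    tp = fp = tn = fn = 0
    for flagged, faulty in events:
        if flagged and faulty:
            tp += 1
        elif flagged and not faulty:
            fp += 1
        elif not flagged and faulty:
            fn += 1
        else:
            tn += 1

    def ratio(numerator, denominator):
        return numerator / denominator if denominator else None

    return {
        "false_positive_rate": ratio(fp, fp + tn),
        "precision": ratio(tp, tp + fp),
        "specificity": ratio(tn, fp + tn),
        "missed_faults": fn,
    }


# Hypothetical review period: 8 true alerts, 2 false alerts, 88 quiet and healthy, 2 missed.
events = ([(True, True)] * 8 + [(True, False)] * 2
          + [(False, False)] * 88 + [(False, True)] * 2)
metrics = alert_metrics(events)
print(metrics)
```

The false positive rate and specificity always sum to one, so tracking both is redundant in principle, but reporting precision alongside them shows how much reviewer time is spent on alerts that turn out to be healthy.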

What Are Escalation Paths for Persistent Anomalies?

Anomaly escalation follows a strict sequence: flag, verify, document, and escalate. If anomalies persist, initiate persistent monitoring, notify the on-call lead, and trigger tiered alerts with time-stamped updates. You’ll review correlations, confirm data integrity, and circle back to stakeholders. If the anomaly remains unresolved, elevate it to advanced engineering and operations, then to executive sponsorship, and set a remediation timetable. You stay proactive, precise, and data-driven by keeping monitoring continuous and each escalation step controlled and clearly defined.
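
The tiered part of this sequence can be sketched as a lookup from how long an anomaly has stayed open to who should be notified. The tier names come from the answer above, but the 60-minute and 240-minute boundaries and the `escalation_target` function are purely hypothetical placeholders for whatever your own policy specifies.

```python
from bisect import bisect_right

# Hypothetical tiers: (minutes the anomaly has persisted, who to notify).
TIERS = [
    (0, "on-call lead"),
    (60, "engineering and operations"),
    (240, "executive sponsor"),
]


def escalation_target(minutes_open: float) -> str:
    """Return the highest tier whose start time has been reached."""
    starts = [start for start, _ in TIERS]
    index = bisect_right(starts, minutes_open) - 1
    return TIERS[max(index, 0)][1]


for minutes in (5, 60, 300):
    print(minutes, escalation_target(minutes))
```

Keeping the tiers in a single table makes the escalation policy easy to review and version alongside the rest of the flow.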

How Is Data Provenance Preserved During Fixes?

You preserve data provenance by documenting every fix, tracing changes through data lineage, and locking audit trails before you deploy. You identify impacted datasets, record timestamps, versions, and responsible engineers, then log validation results and rollback options. You preserve originals, versioned transforms, and granular metadata to show why decisions were made. You implement immutable logs, tamper-evident storage, and continuous integrity checks so your fixes remain transparent, reproducible, and auditable for future analysis.
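
One way to make a log tamper-evident is to chain entries by hash, so that altering any earlier record invalidates every hash after it. The sketch below uses SHA-256 from Python's standard library. The record fields, `append_entry`, and `verify` are hypothetical, and a production system would also need protected storage for the log itself.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash: str, record: dict) -> str:
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list, record: dict) -> None:
    """Append a record whose hash covers the record and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev_hash": prev_hash,
                "hash": _digest(prev_hash, record)})


def verify(log: list) -> bool:
    """Recompute the chain and report whether every entry is intact."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical fix history for one sensor.
log = []
append_entry(log, {"sensor": "temp-01", "action": "recalibrated", "version": "1.2.0"})
append_entry(log, {"sensor": "temp-01", "action": "threshold updated", "version": "1.2.1"})
print(verify(log))  # chain is intact

log[0]["record"]["action"] = "edited after the fact"
print(verify(log))  # the altered record no longer matches its stored hash
```

The chain only detects changes; it does not prevent them, which is why the answer above pairs immutable logs with tamper-evident storage and continuous integrity checks.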